Booter - Bots & Crawlers Manager

| Developer | upress, ilanf haimondo |
|---|---|
| Last updated | February 16, 2026, 18:26 |
| PHP version | 4.0 or later |
| WordPress version | 6.9 |
| License | GPLv2 or later |
| License URI | License information |

Description:

Booter - Bots & Crawlers Manager both prevents damage caused by crawlers and bots and treats damage that has already occurred.

The plugin builds on several existing mechanisms that crawlers and bots already understand and takes them one step further, smartly and almost completely automatically.

To allow the plugin to function correctly, you must follow the instructions and manually enter some data (which must be done by a human being to avoid errors).

At the prevention level

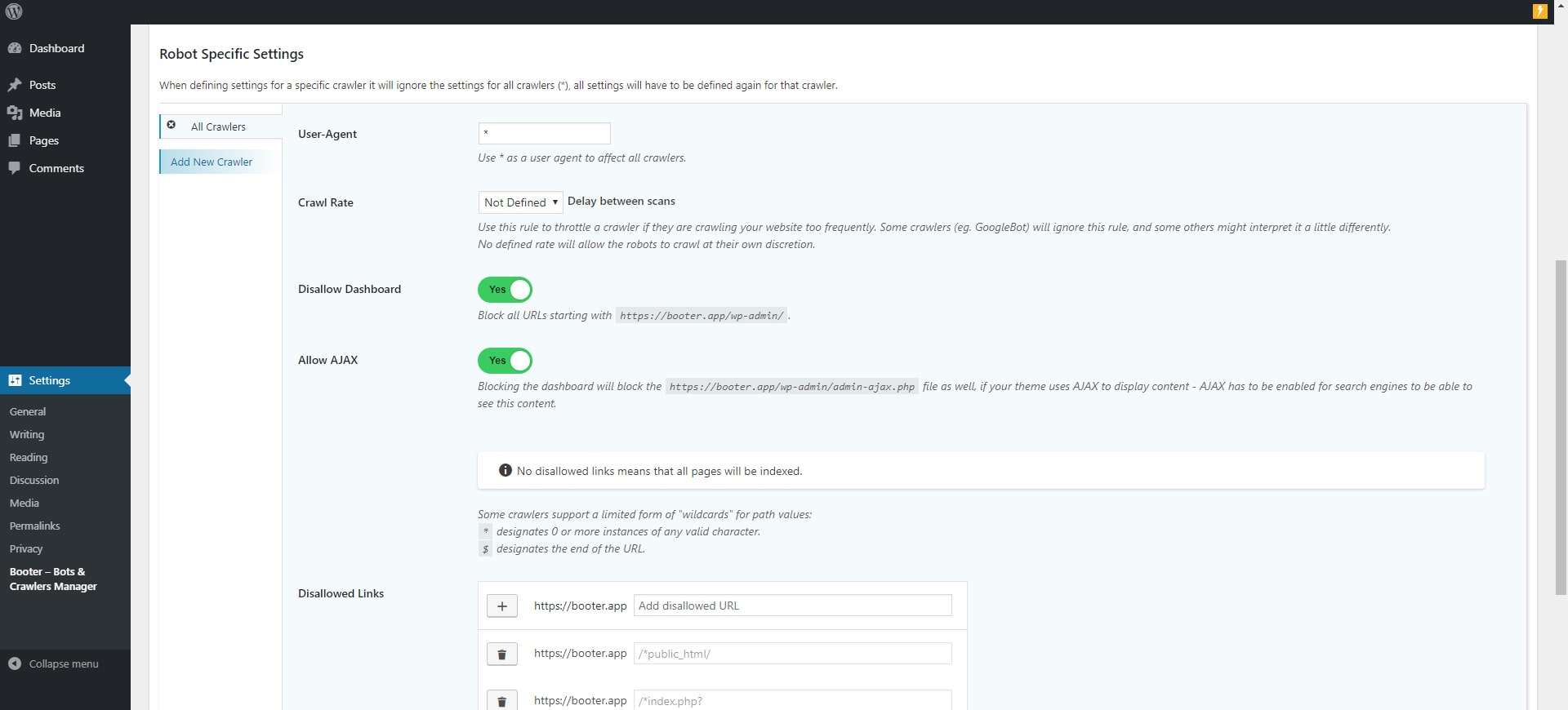

- Booter allows you to manage and create an advanced dynamic robots.txt file.

- View a 404 error log to see the most common bad links.

- Block bad bots that cause high server load through very frequent page crawls, or that are used to search for security vulnerabilities.

- Booter allows you to limit the number of requests from crawlers and bots. When a crawler or bot exceeds the specified number of requests per minute, its requests are rejected for a specified period of time.
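The rate-limiting behavior described above can be sketched as follows. This is a minimal illustration in Python, not the plugin's own code (the plugin itself runs inside WordPress). The class name `RateLimiter`, its parameters, and the idea of identifying a client by a key such as its IP address are all assumptions made for the example.

```python
import time
from collections import defaultdict, deque


class RateLimiter:
    """Hypothetical per-client limiter: at most `max_per_minute` requests
    in any 60-second window, then a block lasting `block_seconds`."""

    def __init__(self, max_per_minute, block_seconds, excluded_agents=()):
        self.max_per_minute = max_per_minute
        self.block_seconds = block_seconds
        # Assumption: exclusions are matched as case-insensitive substrings.
        self.excluded_agents = tuple(a.lower() for a in excluded_agents)
        self.hits = defaultdict(deque)  # client key -> recent request times
        self.blocked_until = {}         # client key -> time the block expires

    def allow(self, client_key, user_agent="", now=None):
        """Return True if the request may proceed, False if it is rejected."""
        now = time.monotonic() if now is None else now
        ua = user_agent.lower()
        if any(agent in ua for agent in self.excluded_agents):
            return True  # excluded user agents are never limited
        if self.blocked_until.get(client_key, 0) > now:
            return False  # still inside the rejection period
        window = self.hits[client_key]
        # Drop requests that are older than one minute.
        while window and now - window[0] >= 60:
            window.popleft()
        window.append(now)
        if len(window) > self.max_per_minute:
            self.blocked_until[client_key] = now + self.block_seconds
            window.clear()
            return False
        return True
```

For example, `RateLimiter(max_per_minute=3, block_seconds=120)` lets the first three requests from one client through, rejects the fourth, and keeps rejecting that client until 120 seconds have passed.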

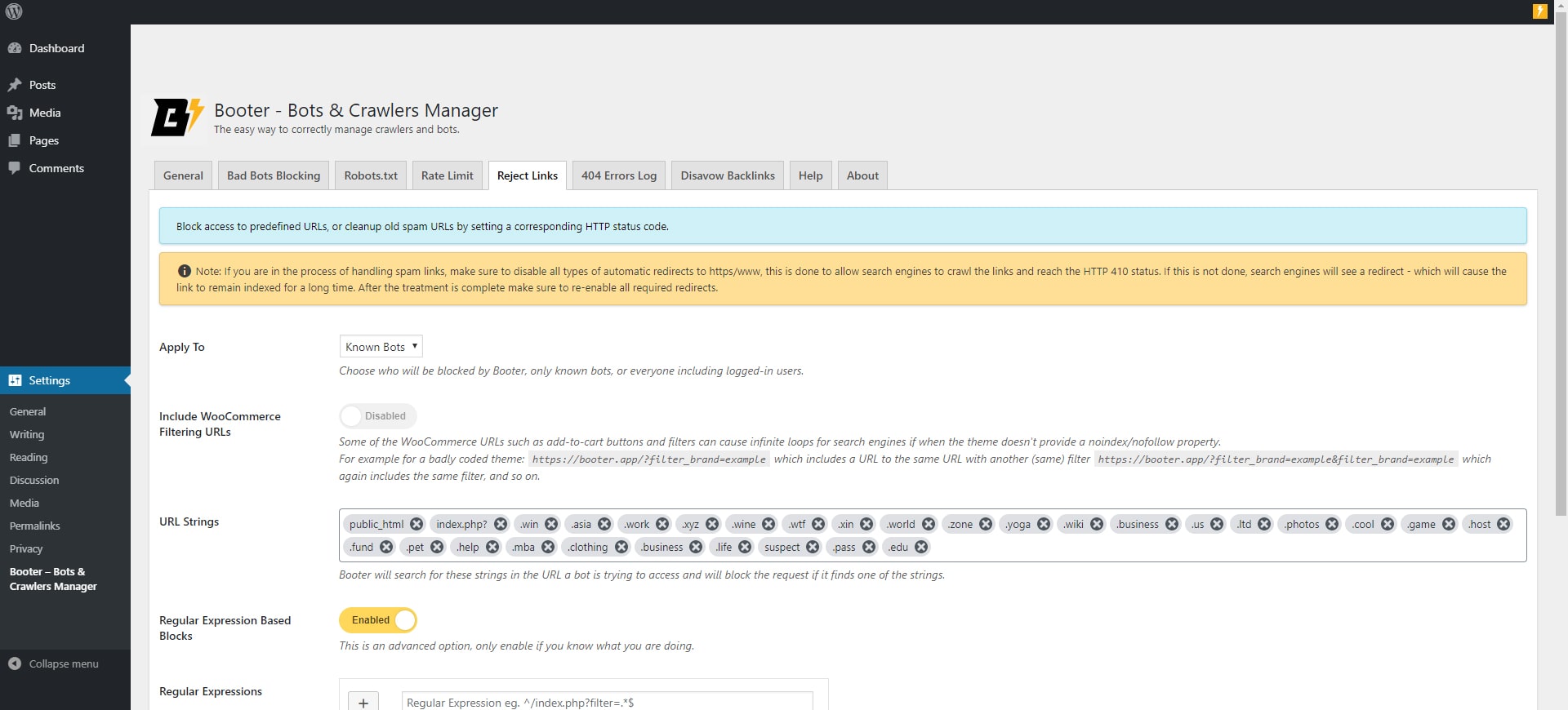

- Reject unwanted links in the fastest way: not by merely blocking them, but by sending the appropriate HTTP status code so that search engines forget them.

- Activate the plugin.

- Enable the 404 error log option.

- Set the access rate limit.

- Watch the 404 log and look for the common parts of the URLs that repeat most often.

- Enter the common parts on the "reject links" page, and ensure the rejection code is 410.

- Clear the 404 error log.

- Repeat the process once every few hours until the 404 error log remains blank.

- Check the status of your website's index coverage every few days.
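The step of finding common URL parts in the 404 log and rejecting them with a 410 can be sketched like this. It is a rough Python illustration only: the function names `common_parts` and `status_for` are hypothetical, and matching a rejected part as a plain substring of the path is an assumption, since the plugin's actual matching rules are not described here.

```python
from collections import Counter
from urllib.parse import urlsplit


def common_parts(logged_urls, top=3):
    """Count path segments across logged 404 URLs and return the most
    frequent ones as (segment, count) pairs."""
    counts = Counter()
    for url in logged_urls:
        segments = [s for s in urlsplit(url).path.split("/") if s]
        counts.update(set(segments))  # count each segment once per URL
    return counts.most_common(top)


def status_for(path, reject_parts, reject_code=410):
    """Return the rejection code if the path contains a rejected part,
    otherwise None (meaning: handle the request normally)."""
    if any(part in path for part in reject_parts):
        return reject_code
    return None


# Example 404 log entries (made up for illustration).
log = [
    "/old-shop/item-1",
    "/old-shop/item-2",
    "/blog/old-shop/x",
    "/about-us",
]
candidates = common_parts(log, top=1)  # the segment shared by most bad URLs
```

HTTP 410 (Gone) signals that a resource was removed on purpose and is not expected to return, which is why the steps above ask for it rather than a plain 404.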

Installation:

- Upload the booter-crawlers-manager folder to the /wp-content/plugins/ directory

- Activate the plugin through the 'Plugins' menu in WordPress

- The plugin starts rate limiting as soon as it is activated; however, it is recommended to adjust the settings to suit your needs under the 'Settings' -> 'Booter - Crawlers Manager' menu

Screenshots:

Changelog:

1.5.8

- Update tested up to

- Fix security issues

- Update tested up to

- Move additional bots list to a remote list

- Fix rare crash of the UI

- Fix rate limiter not properly detecting excluded user agents

- Fix scheduled task not setting properly

- Fix bots list not updating

- Fix regression introduced in version 1.5

- Added options for weekly and monthly 404 log report

- Added option to exclude user agents from rate limiting

- Updated UI components

- Updated bad bots list

- Server IP will be excluded from rate limiting by default